How We Manage On-Prem Infrastructure Access at Scale with Terraform and Teleport

At the Computer Science Research Lab, our team of three administrators manages the on-premises infrastructure that backs all research projects.

This infrastructure supports AI, data science, and various computer science projects running across 40+ servers.

And while large-scale infrastructure requires observability, security, and many other considerations, today I want to focus on how we manage access permissions across different environments—Kubernetes clusters, GPU-powered VMs, and FaaS platforms—at scale.

Our approach meets the following key requirements:

Simple onboarding – Easily configure access for new developers.

Centralized access control – Maintain a clear list of permissions across the infrastructure.

Efficient offboarding – Quickly revoke access for inactive developers.

The Challenge

In the beginning, we didn’t have large server capacities, and each laboratory managed its own resources.

But over time, as the projects scaled, the need for more computation grew. Since each laboratory was limited by its budget, the resources began to be centrally managed and shared across all projects.

Our team took over this responsibility, offloading it from the other laboratories and giving them more time to focus on the projects themselves rather than on maintaining on-premises infrastructure.

From an access management perspective, we initially took the straightforward approach of assigning SSH access to individual servers as needed.

But, as you can imagine, this approach didn’t scale well over time.

If we needed to check who had access to a server, we had to log in and manually trace it back through the configured SSH public keys.

The same was true when verifying whether developers were actively using their access or had simply left it idle.

We also wanted to avoid constantly checking Slack or multiple channels where people requested access, as this quickly became disruptive. There was no clear structure for when and why infrastructure access was granted.

More concerningly, we sometimes discovered—only after a month—that someone who had left the project or been fired still had access to critical resources, including expensive GPUs.

It was a mess, and it was only getting worse over time.

The Solution

We weren’t the first to tackle these challenges, and there were already solid solutions like Teleport, Boundary, and others.

After testing various authentication and auditing proxies, we chose Teleport.

Not only is Teleport open-source (which we actively support), but its free tier also met all our requirements:

Kubernetes Access – Secure access to Kubernetes clusters without needing kubectl exec or direct SSH access.

Certificate-Based Authentication – Eliminates static SSH keys with short-lived SSH certificates.

Audit Logging – Captures access and activity logs for security audits.

OAuth Integration – Authenticate via GitHub.

Two-Factor Authentication (2FA) – Supports OTP-based authentication (e.g., Google Authenticator).

… and more.

While Teleport gave us visibility and control over access permissions, adding or removing permissions was still a manual process, which became increasingly complex as our developer base expanded to well over 200 active users.

Also, the requests for infrastructure access were still scattered across emails, chat messages, and tickets.

In essence, we still lacked a single source of truth where developers could request access in a structured, auditable way.

We needed a system where access requests were centralized, reviewable, and automatically enforced.

So, we built exactly that.

By leveraging GitLab CI/CD and Terraform, we automated the entire process:

Developer Creates Pull Request (PR)

The developer creates a pull request (PR) to the main branch to request infrastructure access.

Infrastructure Changes Are Discussed

Infrastructure changes are discussed and modified within the PR, ensuring that all admins have a say in the process.

Merging the PR Triggers the Pipeline

Merging the PR triggers a pipeline that runs Terraform via GitLab CI/CD.

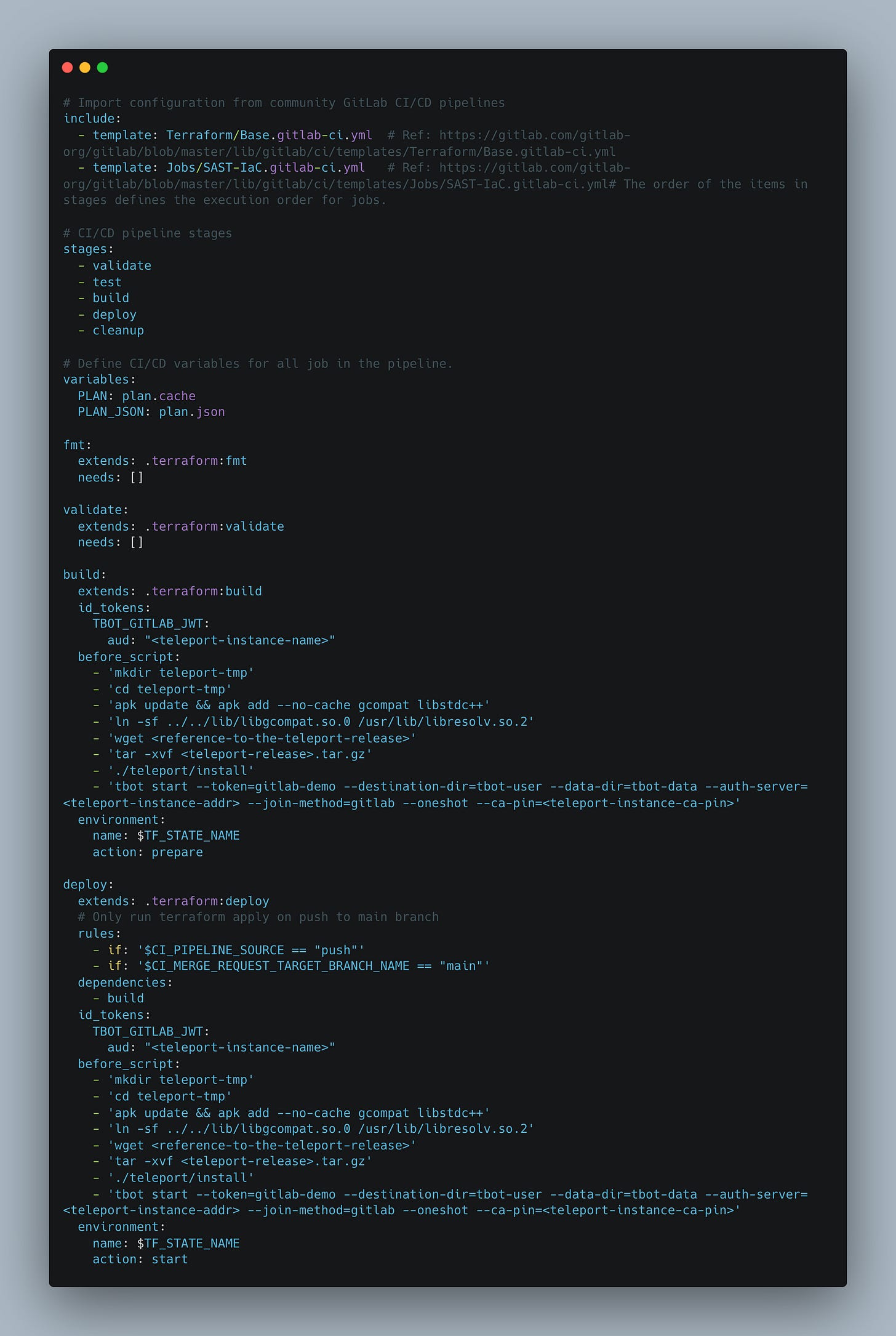

💡 Besides this configuration, you should follow this guide to set up authentication of the GitLab CI/CD pipeline to Teleport using Machine ID.

Terraform Script Configures Access

The Terraform script calls Teleport to configure access to the infrastructure.

Developer Receives Access Credentials

Last but not least, the developer is provided with the access credentials to the desired infrastructure resource through a PR comment.

💡 Some of the fields are intentionally randomized to protect the specifics of our infrastructure, so make sure to customize them for your setup.
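For orientation, here is a minimal sketch of how a Terraform script could turn a YAML file into Teleport roles. It is an illustration under assumptions, not our exact code: the proxy address, the identity file path, the `access.yaml` file name, and its `roles` schema (`name`, `logins`, `node_labels`) are all hypothetical. Mapping users to these roles, for example through the GitHub connector, is left out.

```hcl
terraform {
  required_providers {
    teleport = {
      # Registry address from Teleport's provider docs; pin a version
      # that matches your cluster.
      source = "terraform.releases.teleport.dev/gravitational/teleport"
    }
  }
}

provider "teleport" {
  # Hypothetical values. In CI, the identity file would be the one
  # produced by Machine ID for the pipeline.
  addr               = "teleport.example.com:443"
  identity_file_path = "terraform-identity/identity"
}

locals {
  # Assumed schema: a top-level "roles" list whose entries look like
  # { name: <string>, logins: [<string>], node_labels: { <key>: [<values>] } }
  access = yamldecode(file("${path.module}/access.yaml"))

  # Index the list by role name so for_each gets stable keys.
  roles = { for r in local.access.roles : r.name => r }
}

resource "teleport_role" "from_yaml" {
  for_each = local.roles

  # Role version is an assumption; use the one your cluster supports.
  version = "v7"

  metadata = {
    name        = each.key
    description = "Managed by Terraform from access.yaml"
  }

  spec = {
    allow = {
      logins      = each.value.logins
      node_labels = each.value.node_labels
    }
  }
}
```

Because the roles are keyed by name, removing an entry from the YAML file shows up in `terraform plan` as the destruction of exactly that role, which is what makes revoking access a one-line change.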

We gave all the developers access to the repository (the main branch was protected), but you might wonder—does this mean the developers now need to learn Terraform?

Not really.

In fact, they don’t have to write any Terraform code.

Instead, we made it easy for them to simply update the values in the YAML file, which is then parsed by the Terraform script (as seen in the example code above).

Here’s an example YAML to give you a rough idea.
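To make the shape concrete, the sketch below embeds a made-up YAML document in a Terraform heredoc so it can be evaluated on its own. The role names, logins, labels, and member names are all invented, and the `roles` / `members` layout is an assumption rather than our real schema.

```hcl
locals {
  # Inline stand-in for the YAML file developers would edit in a PR.
  # Every value here is hypothetical.
  access_yaml = <<-YAML
    roles:
      - name: gpu-vision
        logins: [ubuntu]
        node_labels:
          pool: [gpu]
        members: [alice, bob]
      - name: k8s-staging
        logins: [ubuntu]
        node_labels:
          env: [staging]
        members: [bob]
  YAML

  access = yamldecode(local.access_yaml)

  # Invert the file into "developer => list of roles" for a quick
  # overview of who can reach what. The trailing "..." groups all
  # roles that share the same member key into one list.
  roles_by_member = {
    for pair in flatten([
      for r in local.access.roles : [
        for m in r.members : { member = m, role = r.name }
      ]
    ]) : pair.member => pair.role...
  }
}

output "roles_by_member" {
  value = local.roles_by_member
}
```

A developer asking for staging access would only add their name to the `members` list of `k8s-staging`. The `roles_by_member` output is one way to get a per-developer view of permissions straight from the same file.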

That’s it. Now we just have to keep an eye on the PRs, which we do anyway, and we get a clean history of all granted and revoked access permissions.

BONUS TIP ⭐️

We actually didn’t stop there.

As the YAML config became more complex than we initially anticipated, we integrated Backstage. Using its Templates, developers could click through the UI, which would automatically generate a pull request in GitLab with the updated YAML.

We also wanted to automate the merging of some PRs, as certain requests didn’t require our approval to grant developers access to parts of our legacy system. To achieve this, we added OPA policies that validated the required changes and automatically approved specific PRs based on predefined requirements.

I’ll dive into this in a future post, so stay tuned!

I hope you find this resource helpful. Keep an eye out for more Cloud updates and developments in next week's newsletter.

Until then, keep 🧑‍💻-ing!

Warm regards, Teodor